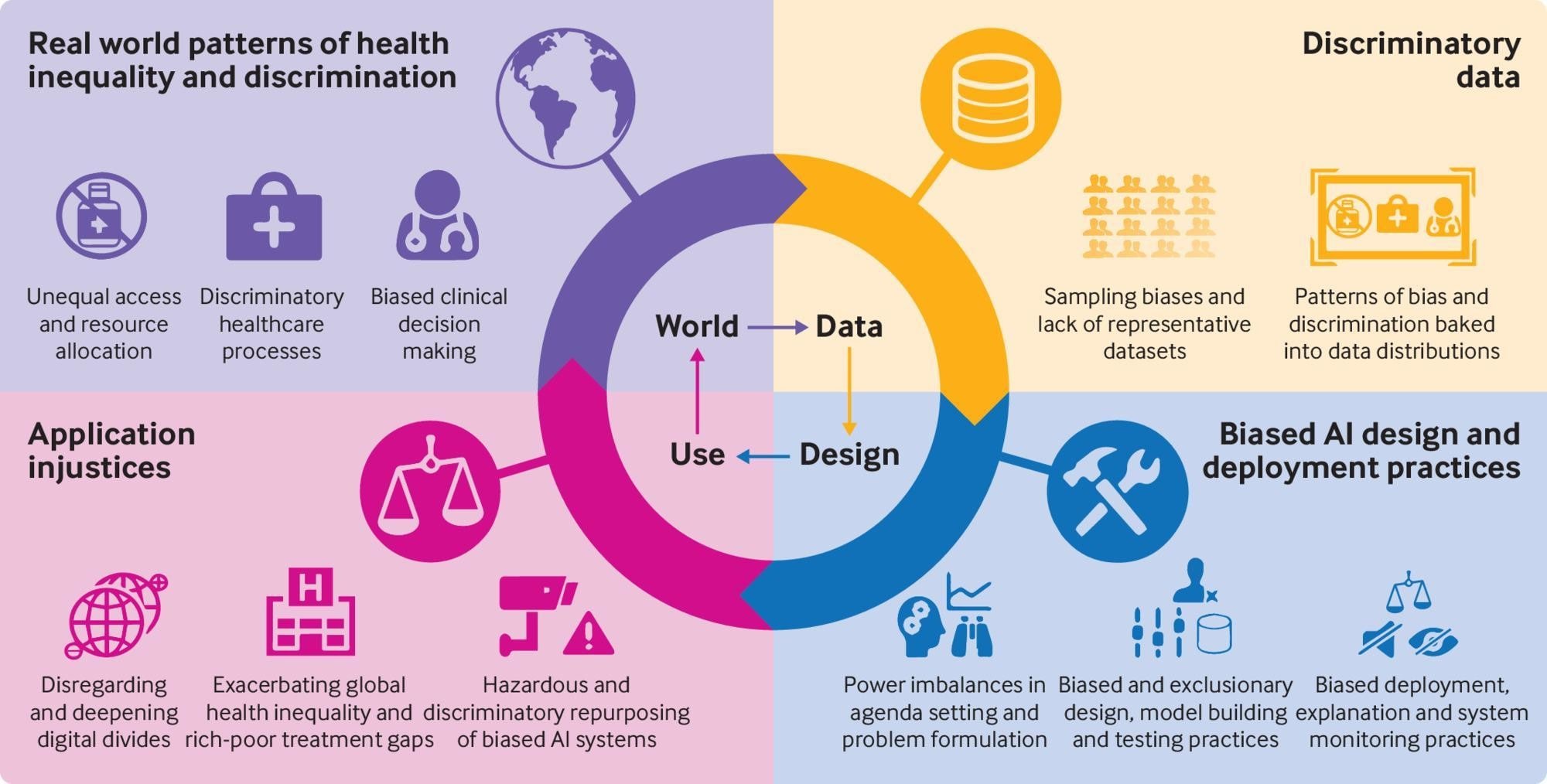

Bias in AI output refers to the presence of systematic prejudices or inaccuracies in the results generated by artificial intelligence algorithms. This bias can stem from various sources within the AI development process, leading to unfair, discriminatory, or skewed outcomes.

There are several types of bias that can manifest in AI output:

- Selection Bias: Arises when training data is not representative of the reality it aims to model, leading to biased outputs

- Confirmation Bias: Occurs when AI systems rely excessively on pre-existing beliefs or trends in data, reinforcing existing biases and hindering the identification of new patterns

- Measurement Bias: Arises when collected data systematically differs from the actual variables of interest, impacting the accuracy and reliability of AI predictions

- Stereotyping Bias: Occurs when a system reinforces harmful stereotypes, for example through inaccuracies in facial recognition systems or language translation tools
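Selection bias is the easiest of these to check with a few lines of code. The sketch below is only an illustration: it compares the share of each group in a training sample against a reference share for the population. The function name `representation_gaps`, the group labels, and all the numbers are made up for the example and do not come from any real dataset.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return (share in sample - share in reference) for each group.

    A positive gap means the group is over-represented in the sample,
    a negative gap means it is under-represented.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        gaps[group] = observed - expected
    return gaps


# Hypothetical training sample: 80 urban records and 20 rural records.
training_groups = ["urban"] * 80 + ["rural"] * 20

# Hypothetical population shares the data is supposed to reflect.
reference = {"urban": 0.55, "rural": 0.45}

gaps = representation_gaps(training_groups, reference)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A large gap in either direction is a hint, not proof, that the data may need rebalancing or more collection for the under-represented group.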

To reduce bias in an AI system, several measures can be deliberately implemented:

- Transparency: The AI system should be transparent about its operations, algorithms, and decision-making processes. Clear information on how the AI functions and how results are generated helps users better understand the system's workings.

- Diverse Data Sources: Utilizing a wide range of data sources helps mitigate bias by offering a more comprehensive view of information. When diverse perspectives and sources are incorporated, the AI can reduce the risk of bias in its responses.

- Regular Audits: The organisation needs to conduct regular audits and evaluations of the AI system to identify and address any biases that may exist. Continuous monitoring and review of the AI's performance is how biases can be detected and rectified promptly.

- Ethical Guidelines: Adhering to ethical guidelines and standards in AI development is crucial for ensuring fairness and impartiality. By following ethical principles and guidelines when the AI system is trained, developers can help it uphold integrity and minimize bias in its operations.

- User Feedback: Encouraging user feedback and actively seeking input from users can help identify potential biases or issues within the system. By incorporating that feedback into the development process, the team behind any AI can address concerns and improve its performance.
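As a rough idea of what a regular audit could look like in practice, the sketch below computes the rate of positive outcomes per group from a log of decisions and then compares the lowest rate with the highest. This is just one simple check among many possible ones. The names `positive_rates` and `disparity_ratio`, the groups "A" and "B", and the log itself are hypothetical and exist only for illustration.

```python
from collections import defaultdict


def positive_rates(records):
    """Compute the share of positive outcomes for each group.

    records: iterable of (group, outcome) pairs, where outcome is True/False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return lowest / highest if highest > 0 else 1.0


# Hypothetical audit log: group A gets 60 positives out of 100 decisions,
# group B gets 30 positives out of 100 decisions.
audit_log = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = positive_rates(audit_log)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 does not by itself prove the system is unfair, but it tells the auditors where to look first. Running the same check on a schedule makes it easier to notice when the numbers drift.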

Continuous improvement, known by the Japanese term Kaizen, is the key to getting better output from an AI system. Organisations that provide AI services or Intelligence-as-a-Service (I-a-a-S) need to be vigilant that such inaccuracies and biases do not creep into their training data.

In the next couple of years we can expect AI systems to become far more powerful and to permeate many core areas of human existence, enterprise, services and development, particularly environmental, educational, energy, utilities, biotech and healthcare systems. With proper training data from authorised sources, and with bias corrected right at the source, we can help ensure the AI system does not output erroneous and biased results.

George
